Spatial navigation: RL-based navigation algorithm with hippocampal place cells

The sense of place and the ability to navigate are among the brain's core functions. Spatial memory provides a perception of the body's position in the environment and relative to surrounding objects. In most animals, the ability to form a spatial sense for self-positioning and navigation depends on the hippocampus. Place cells are the main hippocampal neurons involved: each one becomes active when the animal is at a specific position. Different place cells are active at different locations, and their place fields are formed from environmental cues. O’Keefe (1978) concluded that place cells provide the brain with a spatial reference map, or a sense of place. Because different combinations of place cells are active at different times and in different environments, place cells encode spatial information during navigation through their neural activity, that is, their firing rates. Many modeling approaches can explain how hippocampal representations form, and some of them directly address spatial learning or navigation in the environment. Numerous experiments have demonstrated the essential role of hippocampal cells in navigational tasks such as goal-directed navigation, which can be described simply as moving from a starting location to a goal location, with the whole navigation process driven by the goal.
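As a rough illustration of how place-cell firing rates can encode position, the sketch below models each cell with a Gaussian place field and decodes position with a simple population-vector estimate (the rate-weighted mean of field centres). The number of cells, field width, peak rate and Poisson spiking are assumptions chosen for illustration; this is not the decoder used in this project.

```python
import numpy as np

# Illustrative assumptions: 50 place cells with Gaussian fields in a 1 m x 1 m arena.
rng = np.random.default_rng(0)
n_cells = 50
centres = rng.uniform(0.0, 1.0, size=(n_cells, 2))  # place-field centres (m)
sigma = 0.1       # field width (m), assumed
peak_rate = 20.0  # peak firing rate (Hz), assumed


def firing_rates(pos):
    """Expected firing rate of every cell when the animal is at pos = (x, y)."""
    d2 = np.sum((centres - pos) ** 2, axis=1)
    return peak_rate * np.exp(-d2 / (2 * sigma ** 2))


def decode_position(rates):
    """Population-vector estimate: rate-weighted mean of the field centres."""
    total = rates.sum()
    if total == 0:
        # No activity at all: fall back to the centroid of all fields.
        return centres.mean(axis=0)
    return (rates / total) @ centres


true_pos = np.array([0.4, 0.7])
# Poisson spike counts in a 0.5 s window stand in for noisy recorded activity.
counts = rng.poisson(firing_rates(true_pos) * 0.5)
estimate = decode_position(counts.astype(float))
print(true_pos, estimate)
```

With a single active cell the estimate collapses onto that cell's field centre; with many cells covering the arena it becomes a blend of the fields near the animal, which is why sparse coverage (few recorded cells) limits decoding accuracy.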
This mechanism is consistent with reinforcement learning (RL), in which an agent learns navigational policies by interacting with the environment and must balance exploring new states against exploiting the actions it has already selected. However, despite the success of many models, standard RL methods learn inefficiently and predict with low accuracy in environments with sparse and delayed rewards, and they scale poorly to larger or more complex tasks. Furthermore, electrophysiological recordings usually cannot represent the neural activity of the entire brain, and changes in electrode stability over time degrade the quality of the neural signals. With too few recorded signals, the place fields cannot cover the entire space, and the resulting smaller number of states also reduces the accuracy of RL-based algorithms.
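For reference, a minimal tabular Q-learning agent with epsilon-greedy exploration is sketched below on a hypothetical 5x5 grid where the only reward is at the goal cell. This makes the sparse-reward problem concrete: until the goal is first reached, every update has a target of zero. The grid, start and goal cells, and the hyperparameters (ALPHA, GAMMA, EPSILON, MAX_STEPS) are illustrative assumptions, not values from this study.

```python
import numpy as np

# Illustrative assumptions: a 5x5 grid, one rewarded goal cell, these hyperparameters.
N = 5
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right
START, GOAL = (0, 0), (4, 4)
ALPHA, GAMMA, EPSILON = 0.1, 0.9, 0.1
MAX_STEPS = 200
rng = np.random.default_rng(1)
Q = np.zeros((N, N, len(ACTIONS)))


def step(state, a):
    """Move one cell (walls clip the move); reward 1 only when the goal is reached."""
    dr, dc = ACTIONS[a]
    nxt = (min(max(state[0] + dr, 0), N - 1), min(max(state[1] + dc, 0), N - 1))
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done


def choose_action(state):
    """Epsilon-greedy: explore at random, otherwise exploit Q (ties broken at random)."""
    q = Q[state[0], state[1]]
    if rng.random() < EPSILON:
        return int(rng.integers(len(ACTIONS)))
    return int(rng.choice(np.flatnonzero(q == q.max())))


for episode in range(500):
    state = START
    for _ in range(MAX_STEPS):
        a = choose_action(state)
        nxt, reward, done = step(state, a)
        # Terminal transitions bootstrap nothing; others use the best next-state value.
        target = reward if done else reward + GAMMA * Q[nxt[0], nxt[1]].max()
        Q[state[0], state[1], a] += ALPHA * (target - Q[state[0], state[1], a])
        state = nxt
        if done:
            break
```

Ties in Q are broken at random because, with an all-zero table, always taking the first maximal action would keep the agent pushing against a wall and it would almost never find the sparse reward.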

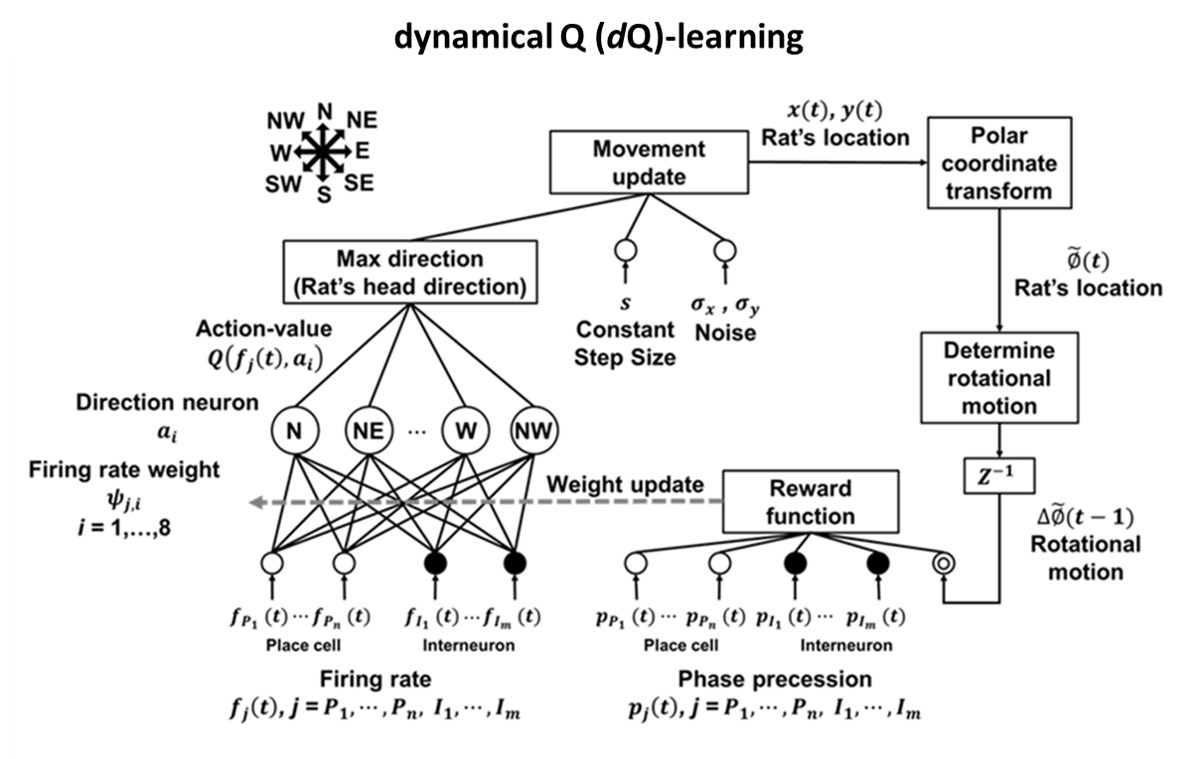

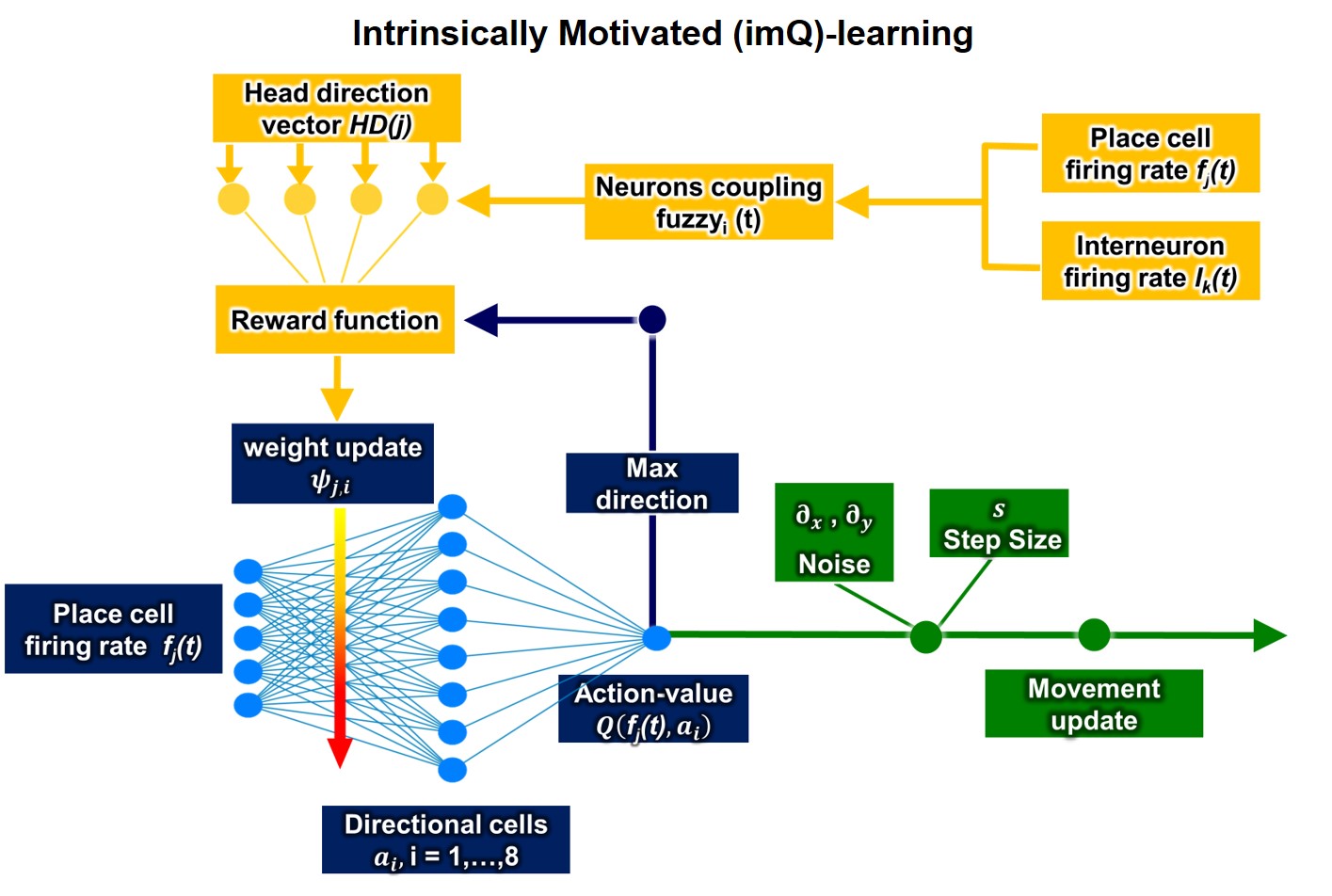

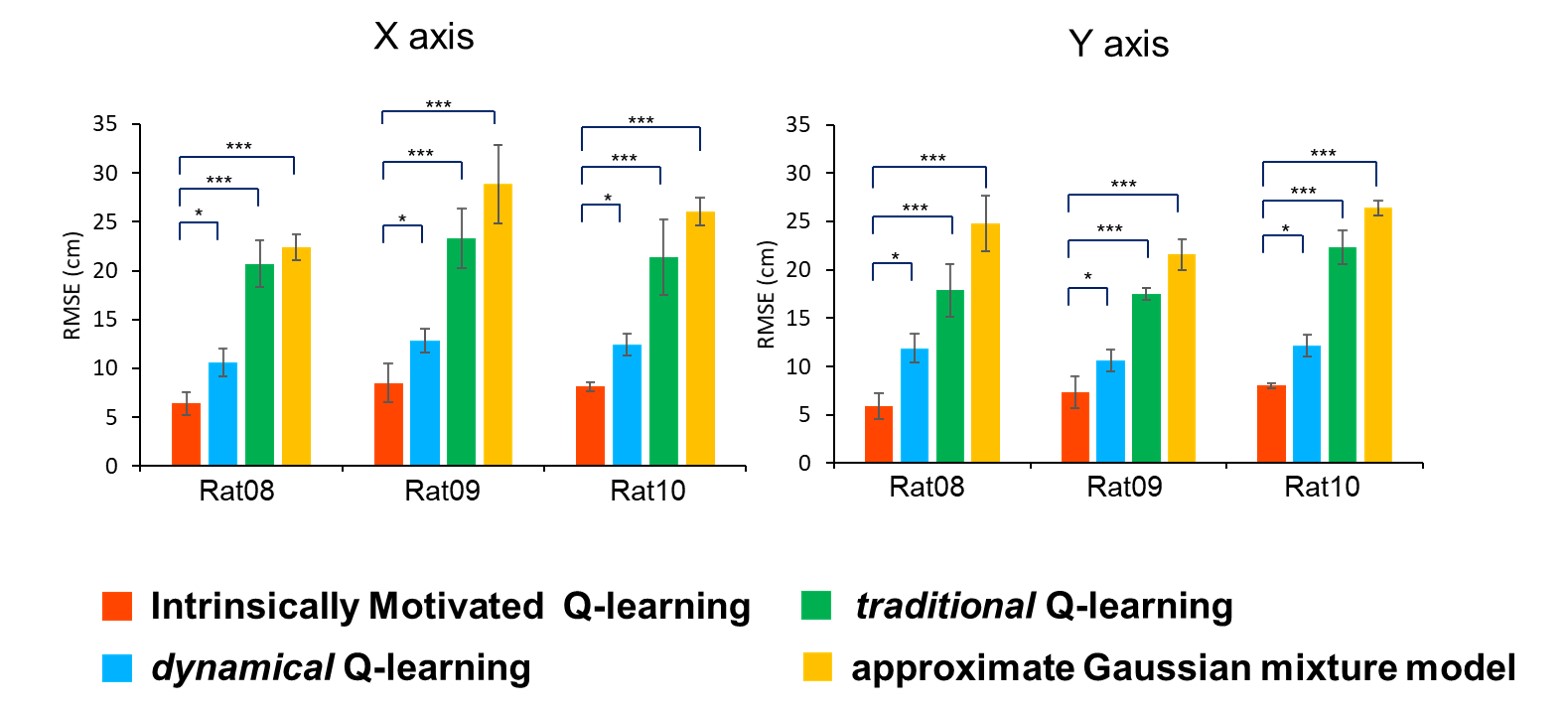

To achieve more accurate trajectory decoding, we focused on two goals. First, we used the navigation information contained in the neural signals as the basis for adjusting the reward function, instead of defining reward values manually, which is arbitrary and time-consuming. Second, we regulated the reward mechanism in a way that conforms to biological mechanisms, using a dynamically updated reward function and a hierarchical reward function that combines intrinsic and external rewards. These methods significantly increased the richness of the reward values and improved the accuracy of trajectory predictions. Our research therefore combines the spatial characteristics of place cells and interneurons, together with related direction information, with an RL algorithm to decode the movement trajectory of the rat in the environment.

We propose a revised version of the RL algorithm, dynamical Q (dQ)-learning, which assigns the reward function adaptively to improve decoding performance. In dQ-learning, we employed phase precession as an input to the reward function so that a reward is available at every moment, which may improve the convergence rate and prediction accuracy. To further improve decoding performance, we then built intrinsically motivated Q (imQ)-learning, which establishes a hierarchical reward function from an external reward based on the head-direction tuning of place cells and an intrinsic reward based on interneuron-place cell coupling. ImQ-learning integrates CA1 navigation-related neural signals and adjusts the reward value using high- and low-level observations, which helps avoid interference from white noise and improved both the accuracy of trajectory decoding and the convergence rate of the model. Overall, our study significantly improved the RL-based navigation model of place cells and clearly enhanced the accuracy of the predicted trajectories.
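The sketch below shows, under clearly labeled assumptions, how a dense per-step reward combining an external and an intrinsic term could feed a standard one-step Q-learning update. The text above names the signals behind each term (head-direction tuning of place cells and interneuron-place cell coupling) but not their formulas, so extrinsic_reward, intrinsic_reward, the weight beta and every other name here are hypothetical placeholders, not the dQ or imQ definitions. A phase-precession-derived term could be plugged in the same way as the per-step reward of dQ-learning.

```python
import numpy as np

# Hypothetical placeholders: the reward formulas below are NOT the dQ/imQ definitions.


def extrinsic_reward(head_dir, goal_dir):
    """Placeholder external reward: cosine of the angle between heading and goal (radians)."""
    return float(np.cos(head_dir - goal_dir))


def intrinsic_reward(coupling_strength):
    """Placeholder intrinsic reward: interneuron-place cell coupling, assumed scaled to [0, 1]."""
    return float(np.clip(coupling_strength, 0.0, 1.0))


def hierarchical_reward(head_dir, goal_dir, coupling_strength, beta=0.5):
    """Dense per-step reward r_t = r_ext + beta * r_int; beta is an assumed weight."""
    return extrinsic_reward(head_dir, goal_dir) + beta * intrinsic_reward(coupling_strength)


def q_update(Q, s, a, r, s_next, alpha=0.1, gamma=0.9):
    """Standard one-step Q-learning update driven by the dense reward r."""
    td_target = r + gamma * np.max(Q[s_next])
    Q[s, a] += alpha * (td_target - Q[s, a])
    return Q


Q = np.zeros((3, 4))  # 3 hypothetical states x 4 actions
r = hierarchical_reward(head_dir=0.0, goal_dir=0.0, coupling_strength=0.8)
Q = q_update(Q, s=0, a=2, r=r, s_next=1)
print(r, Q[0, 2])
```

Because a reward is produced at every step rather than only at the goal, each transition carries a learning signal, which is the property the dense-reward designs described above rely on.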

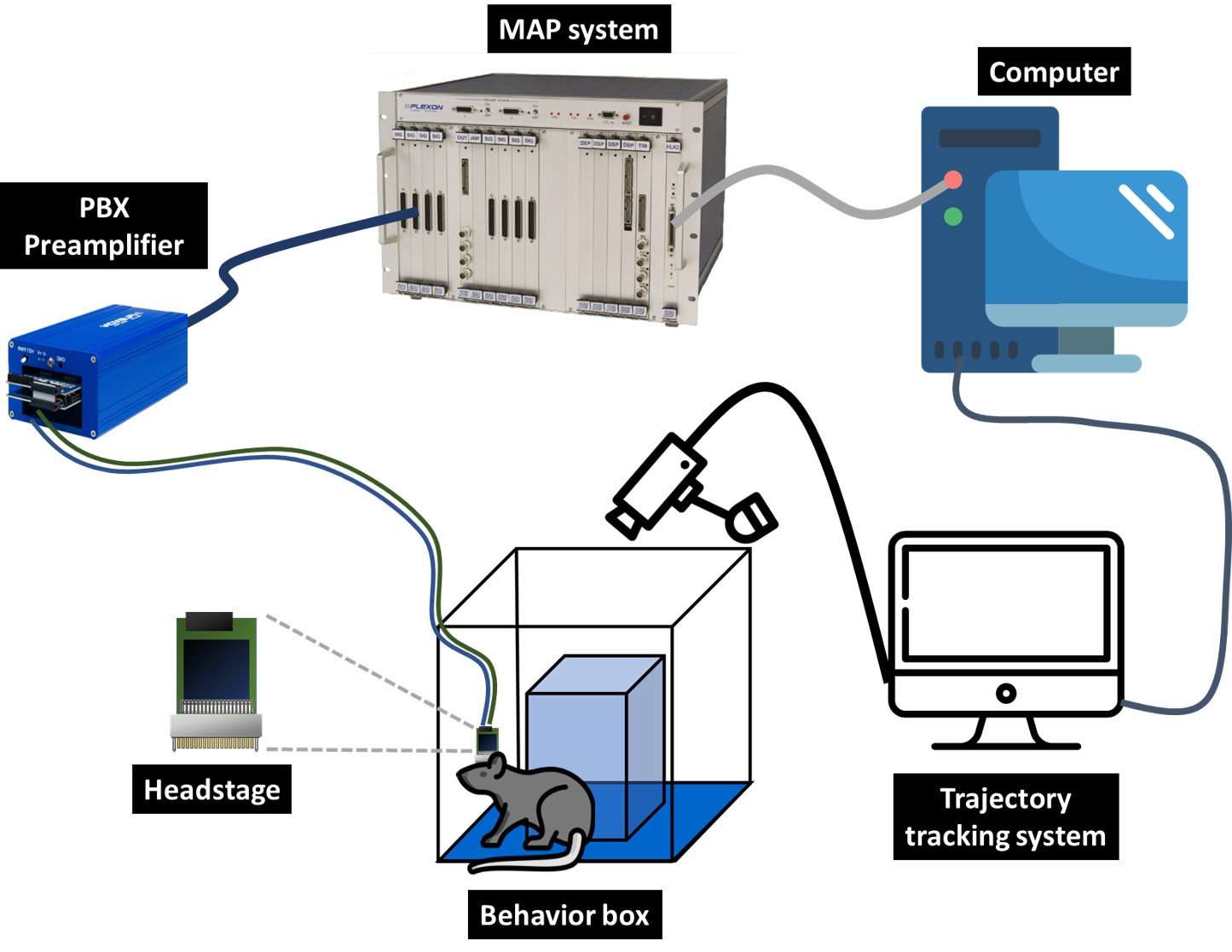

Fig2. The architecture of the electrophysiological neuron recording system and the animal trajectory recording system, with the experimental environment set up for a water-rewarded lever-pressing learning task.

Fig3. The architecture of dynamical Q-learning

Fig4. The architecture of intrinsically motivated Q-learning

Fig5. Performance comparison of the different navigation models. Values are the RMSE between the real and predicted trajectories along the X or Y axis.
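The RMSE values in Fig5 are computed separately along each axis. A minimal sketch of that metric follows, assuming both trajectories are sampled at the same time points and stored as (T, 2) arrays of (x, y) coordinates; rmse_per_axis is a hypothetical helper name.

```python
import numpy as np


def rmse_per_axis(real, predicted):
    """RMSE between real and predicted trajectories, computed separately for X and Y.

    real, predicted: array-likes of shape (T, 2) with columns (x, y).
    """
    real = np.asarray(real, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    err = predicted - real
    return np.sqrt(np.mean(err ** 2, axis=0))  # array([rmse_x, rmse_y])


real = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
pred = [[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]]
print(rmse_per_axis(real, pred))  # prints [0. 1.]
```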

Designed by: NTK Lab © Copyright 2020. All Rights Reserved.